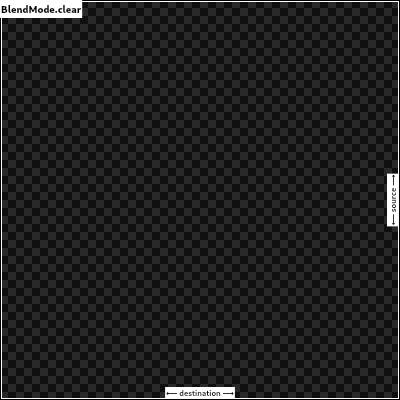

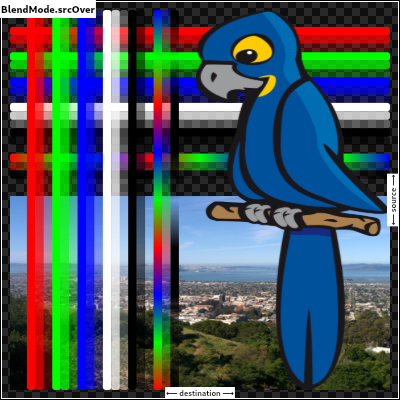

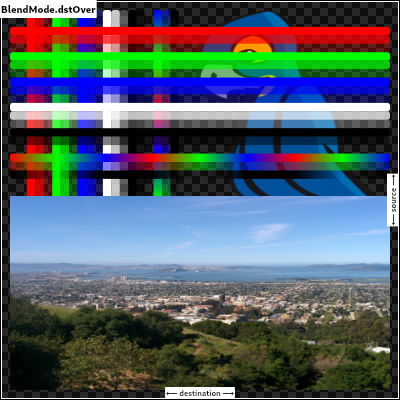

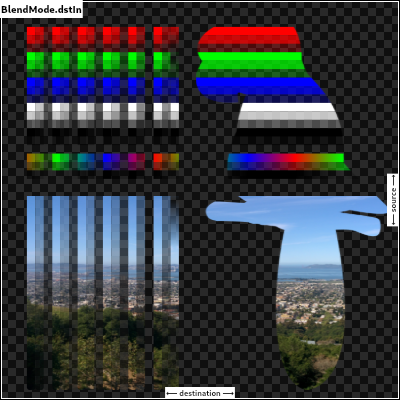

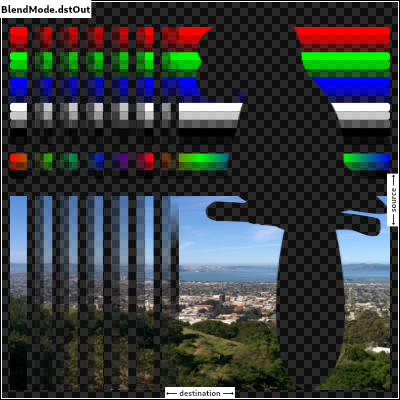

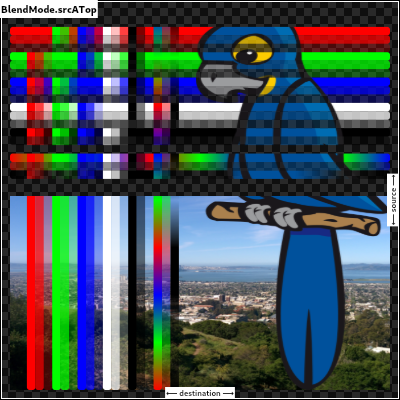

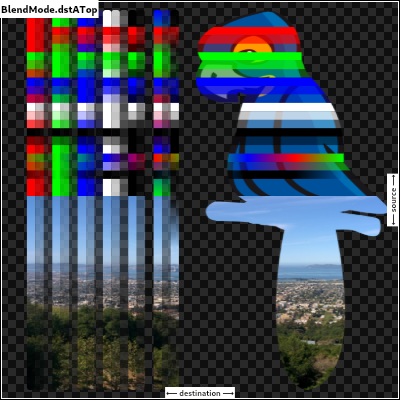

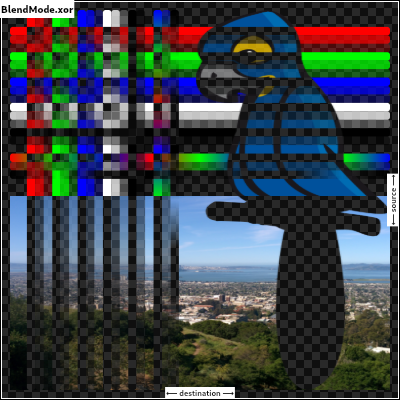

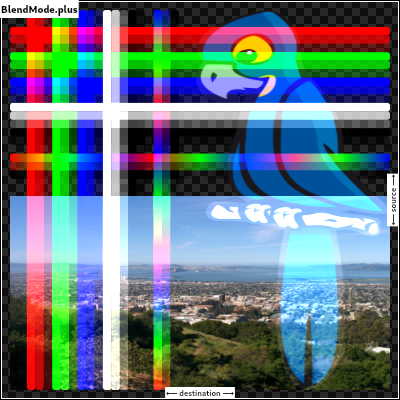

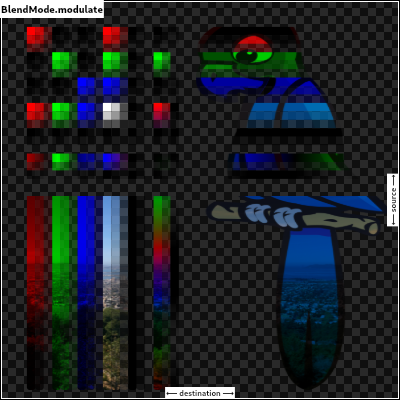

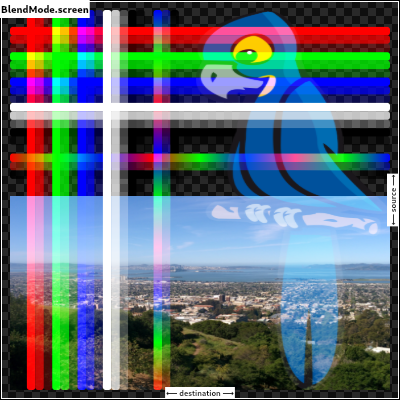

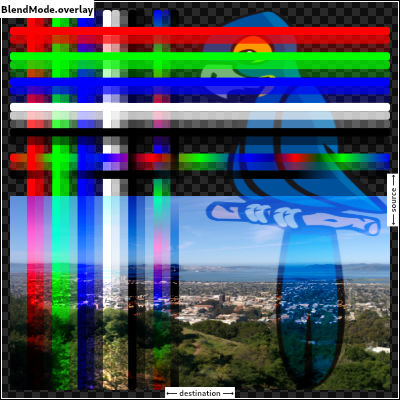

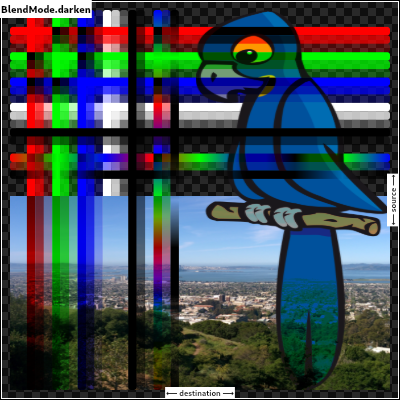

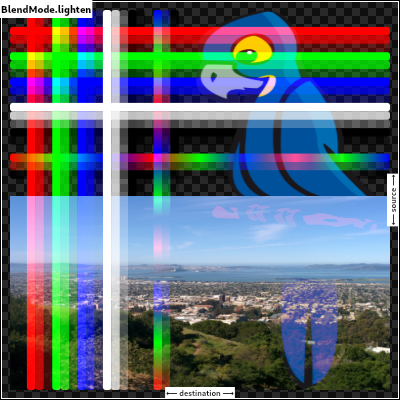

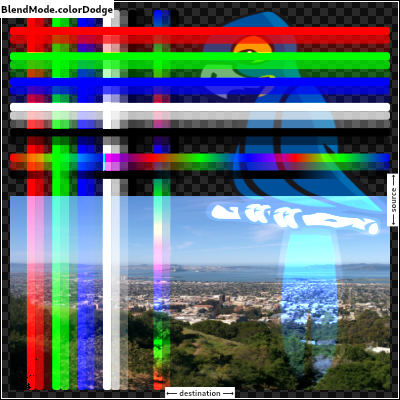

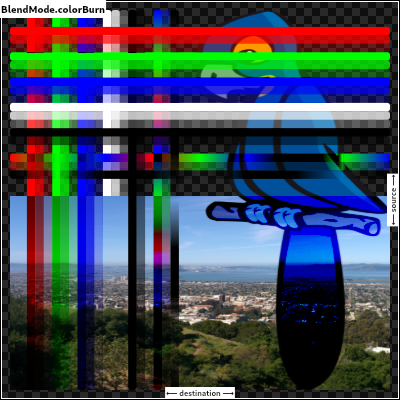

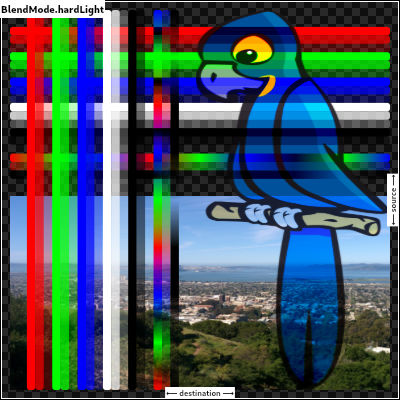

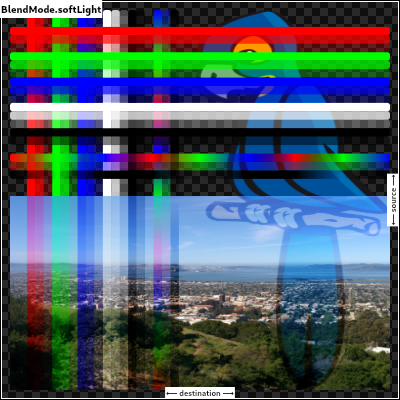

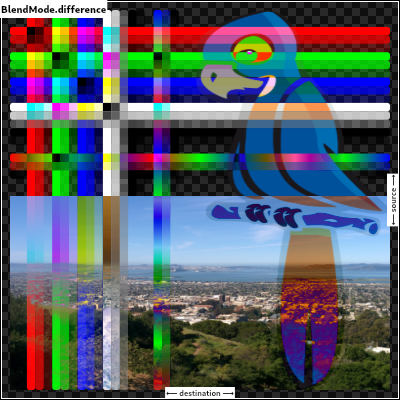

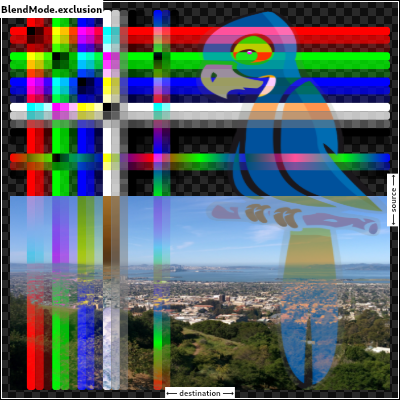

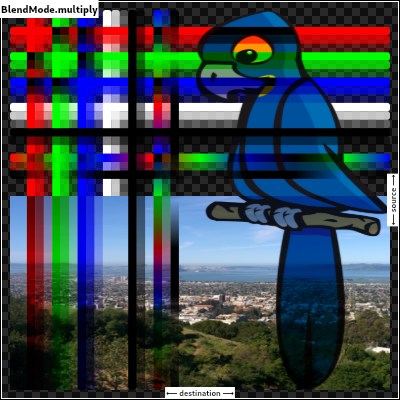

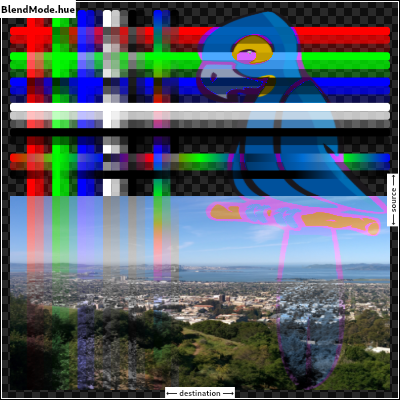

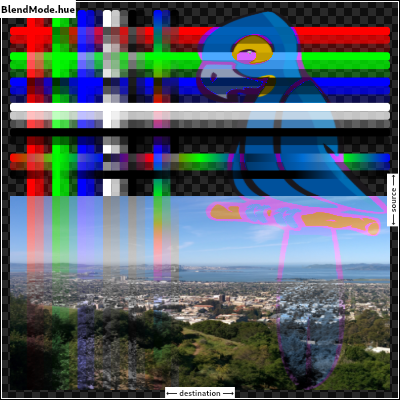

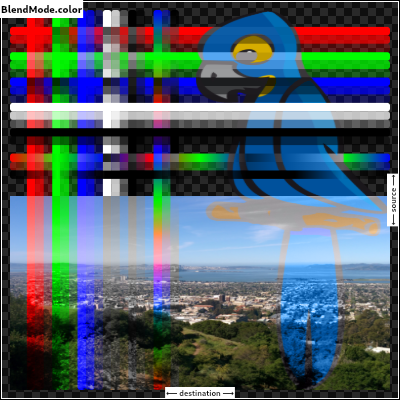

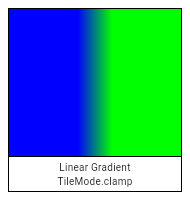

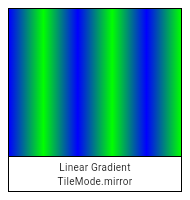

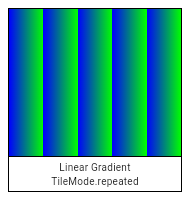

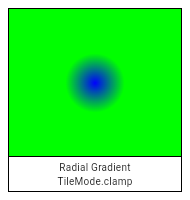

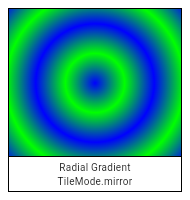

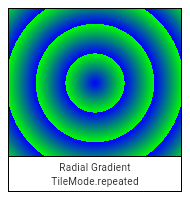

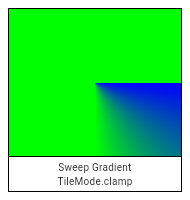

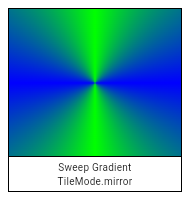

// Copyright 2013 The Flutter Authors. All rights reserved.
// Use of this source code is governed by a BSD-style license that can be
// found in the LICENSE file.

part of ui;

bool _offsetIsValid(Offset offset) {
  assert(offset != null, 'Offset argument was null.');
  assert(!offset.dx.isNaN && !offset.dy.isNaN,
      'Offset argument contained a NaN value.');
  return true;
}

bool _matrix4IsValid(Float64List matrix4) {
  assert(matrix4 != null, 'Matrix4 argument was null.');
  assert(matrix4.length == 16, 'Matrix4 must have 16 entries.');
  return true;
}

Color _scaleAlpha(Color a, double factor) {
  return a.withAlpha((a.alpha * factor).round().clamp(0, 255));
}

/// An immutable 32 bit color value in ARGB.
class Color {
  /// Construct a color from the lower 32 bits of an int.
  ///
  /// Bits 24-31 are the alpha value.
  /// Bits 16-23 are the red value.
  /// Bits 8-15 are the green value.
  /// Bits 0-7 are the blue value.
  const Color(int value) : _value = value & 0xFFFFFFFF;

  /// Construct a color from the lower 8 bits of four integers.
  const Color.fromARGB(int a, int r, int g, int b)
      : _value = (((a & 0xff) << 24) |
                ((r & 0xff) << 16) |
                ((g & 0xff) << 8) |
                ((b & 0xff) << 0)) &
            0xFFFFFFFF;

  /// Create a color from red, green, blue, and opacity, similar to `rgba()` in CSS.
  ///
  /// * `r` is [red], from 0 to 255.
  /// * `g` is [green], from 0 to 255.
  /// * `b` is [blue], from 0 to 255.
  /// * `opacity` is the alpha channel of this color as a double, with 0.0 being
  ///   transparent and 1.0 being fully opaque.
  ///
  /// Out of range values are brought into range by keeping only the low 8 bits
  /// of each channel (that is, modulo 256).
  ///
  /// See also [fromARGB], which takes the opacity as an integer value.
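  ///
  /// As a minimal sketch of the opacity conversion (the constant name below is
  /// illustrative only and is not part of this library), an opacity of 0.5 is
  /// truncated to an alpha of 127 (0x7f), because `0.5 * 0xff ~/ 1` is 127:
  ///
  /// ```dart
  /// // Half-transparent pure red; stored as 0x7fff0000.
  /// const Color translucentRed = Color.fromRGBO(255, 0, 0, 0.5);
  /// assert(translucentRed.alpha == 0x7f);
  /// assert(translucentRed.value == 0x7fff0000);
  /// ```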
  const Color.fromRGBO(int r, int g, int b, double opacity)
      : _value = ((((opacity * 0xff ~/ 1) & 0xff) << 24) |
                ((r & 0xff) << 16) |
                ((g & 0xff) << 8) |
                ((b & 0xff) << 0)) &
            0xFFFFFFFF;

  /// A 32 bit value representing this color.
  ///
  /// Bits 24-31 are the alpha value.
  /// Bits 16-23 are the red value.
  /// Bits 8-15 are the green value.
  /// Bits 0-7 are the blue value.
  int get value => _value;
  final int _value;

  /// The alpha channel of this color in an 8 bit value.
  int get alpha => (0xff000000 & _value) >> 24;

  /// The alpha channel of this color as a double.
  double get opacity => alpha / 0xFF;

  /// The red channel of this color in an 8 bit value.
  int get red => (0x00ff0000 & _value) >> 16;

  /// The green channel of this color in an 8 bit value.
  int get green => (0x0000ff00 & _value) >> 8;

  /// The blue channel of this color in an 8 bit value.
  int get blue => (0x000000ff & _value) >> 0;

  /// Returns a new color that matches this color with the alpha channel
  /// replaced with a (which ranges from 0 to 255).
  Color withAlpha(int a) {
    return Color.fromARGB(a, red, green, blue);
  }

  /// Returns a new color that matches this color with the alpha channel
  /// replaced with the given opacity (which ranges from 0.0 to 1.0).
  Color withOpacity(double opacity) {
    assert(opacity >= 0.0 && opacity <= 1.0);
    return withAlpha((255.0 * opacity).round());
  }

  /// Returns a new color that matches this color with the red channel replaced
  /// with r.
  Color withRed(int r) {
    return Color.fromARGB(alpha, r, green, blue);
  }

  /// Returns a new color that matches this color with the green channel
  /// replaced with g.
  Color withGreen(int g) {
    return Color.fromARGB(alpha, red, g, blue);
  }

  /// Returns a new color that matches this color with the blue channel replaced
  /// with b.
  Color withBlue(int b) {
    return Color.fromARGB(alpha, red, green, b);
  }

  // See <https://www.w3.org/TR/WCAG20/#relativeluminancedef>
  static double _linearizeColorComponent(double component) {
    if (component <= 0.03928) {
      return component / 12.92;
    }
    return math.pow((component + 0.055) / 1.055, 2.4);
  }

  /// Returns a brightness value between 0 for darkest and 1 for lightest.
  ///
  /// Represents the relative luminance of the color. This value is
  /// computationally expensive to calculate.
  ///
  /// See <https://en.wikipedia.org/wiki/Relative_luminance>.
  double computeLuminance() {
    // See <https://www.w3.org/TR/WCAG20/#relativeluminancedef>
    final double R = _linearizeColorComponent(red / 0xFF);
    final double G = _linearizeColorComponent(green / 0xFF);
    final double B = _linearizeColorComponent(blue / 0xFF);
    return 0.2126 * R + 0.7152 * G + 0.0722 * B;
  }

  /// Linearly interpolate between two colors.
  ///
  /// This is intended to be fast but as a result may be ugly. Consider
  /// [HSVColor] or writing custom logic for interpolating colors.
  ///
  /// If either color is null, this function linearly interpolates from a
  /// transparent instance of the other color. This is usually preferable to
  /// interpolating from [material.Colors.transparent] (`const
  /// Color(0x00000000)`), which is specifically transparent _black_.
  ///
  /// The `t` argument represents position on the timeline, with 0.0 meaning
  /// that the interpolation has not started, returning `a` (or something
  /// equivalent to `a`), 1.0 meaning that the interpolation has finished,
  /// returning `b` (or something equivalent to `b`), and values in between
  /// meaning that the interpolation is at the relevant point on the timeline
  /// between `a` and `b`.
  /// The interpolation can be extrapolated beyond 0.0 and
  /// 1.0, so negative values and values greater than 1.0 are valid (and can
  /// easily be generated by curves such as [Curves.elasticInOut]). Each channel
  /// will be clamped to the range 0 to 255.
  ///
  /// Values for `t` are usually obtained from an [Animation<double>], such as
  /// an [AnimationController].
  static Color lerp(Color a, Color b, double t) {
    assert(t != null);
    if (a == null && b == null) {
      return null;
    }
    if (a == null) {
      return _scaleAlpha(b, t);
    }
    if (b == null) {
      return _scaleAlpha(a, 1.0 - t);
    }
    return Color.fromARGB(
      lerpDouble(a.alpha, b.alpha, t).toInt().clamp(0, 255),
      lerpDouble(a.red, b.red, t).toInt().clamp(0, 255),
      lerpDouble(a.green, b.green, t).toInt().clamp(0, 255),
      lerpDouble(a.blue, b.blue, t).toInt().clamp(0, 255),
    );
  }

  /// Combine the foreground color as a transparent color over top
  /// of a background color, and return the resulting combined color.
  ///
  /// This uses standard alpha blending ("SRC over DST") rules to produce a
  /// blended color from two colors. This can be used as a performance
  /// enhancement when trying to avoid needless alpha blending compositing
  /// operations for two things that are solid colors with the same shape, but
  /// overlay each other: instead, just paint one with the combined color.
  static Color alphaBlend(Color foreground, Color background) {
    final int alpha = foreground.alpha;
    if (alpha == 0x00) {
      // Foreground completely transparent.
      return background;
    }
    final int invAlpha = 0xff - alpha;
    int backAlpha = background.alpha;
    if (backAlpha == 0xff) {
      // Opaque background case
      return Color.fromARGB(
        0xff,
        (alpha * foreground.red + invAlpha * background.red) ~/ 0xff,
        (alpha * foreground.green + invAlpha * background.green) ~/ 0xff,
        (alpha * foreground.blue + invAlpha * background.blue) ~/ 0xff,
      );
    } else {
      // General case
      backAlpha = (backAlpha * invAlpha) ~/ 0xff;
      final int outAlpha = alpha + backAlpha;
      assert(outAlpha != 0x00);
      return Color.fromARGB(
        outAlpha,
        (foreground.red * alpha + background.red * backAlpha) ~/ outAlpha,
        (foreground.green * alpha + background.green * backAlpha) ~/ outAlpha,
        (foreground.blue * alpha + background.blue * backAlpha) ~/ outAlpha,
      );
    }
  }

  @override
  bool operator ==(dynamic other) {
    if (identical(this, other)) {
      return true;
    }
    if (other.runtimeType != runtimeType) {
      return false;
    }
    final Color typedOther = other;
    return value == typedOther.value;
  }

  @override
  int get hashCode => _value.hashCode;

  /// Converts color to a css compatible attribute value.
  // webOnly
  String toCssString() {
    if ((0xff000000 & _value) == 0xff000000) {
      return toCssStringRgbOnly();
    } else {
      final double alpha = ((_value >> 24) & 0xFF) / 255.0;
      final StringBuffer sb = StringBuffer();
      sb.write('rgba(');
      sb.write(((_value >> 16) & 0xFF).toString());
      sb.write(',');
      sb.write(((_value >> 8) & 0xFF).toString());
      sb.write(',');
      sb.write((_value & 0xFF).toString());
      sb.write(',');
      sb.write(alpha.toString());
      sb.write(')');
      return sb.toString();
    }
  }

  /// Returns the CSS value of this color without the alpha component.
  ///
  /// This is useful when painting shadows as on the Web shadow opacity combines
  /// with the paint opacity.
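  ///
  /// A minimal sketch of the expected output, assuming a hypothetical opaque
  /// color value chosen only for illustration:
  ///
  /// ```dart
  /// // The alpha byte is dropped and the RGB bytes are zero-padded to six
  /// // hex digits.
  /// final String css = const Color(0xFF336699).toCssStringRgbOnly();
  /// assert(css == '#336699');
  /// ```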
  // webOnly
  String toCssStringRgbOnly() {
    final String paddedValue = '00000${_value.toRadixString(16)}';
    return '#${paddedValue.substring(paddedValue.length - 6)}';
  }

  @override
  String toString() {
    if (engine.assertionsEnabled) {
      return 'Color(0x${value.toRadixString(16).padLeft(8, '0')})';
    } else {
      return super.toString();
    }
  }
}

/// Styles to use for line endings.
///
/// See [Paint.strokeCap].
enum StrokeCap {
  /// Begin and end contours with a flat edge and no extension.
  butt,

  /// Begin and end contours with a semi-circle extension.
  round,

  /// Begin and end contours with a half square extension. This is
  /// similar to extending each contour by half the stroke width (as
  /// given by [Paint.strokeWidth]).
  square,
}

/// Styles to use for line segment joins.
///
/// This only affects line joins for polygons drawn by [Canvas.drawPath] and
/// rectangles, not points drawn as lines with [Canvas.drawPoints].
///
/// See also:
///
///  * [Paint.strokeJoin] and [Paint.strokeMiterLimit] for how this value is
///    used.
///  * [StrokeCap] for the different kinds of line endings.
// These enum values must be kept in sync with SkPaint::Join.
enum StrokeJoin {
  /// Joins between line segments form sharp corners.
  ///
  /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/miter_4_join.mp4}
  ///
  /// The center of the line segment is colored in the diagram above to
  /// highlight the join, but in normal usage the join is the same color as the
  /// line.
  ///
  /// See also:
  ///
  ///  * [Paint.strokeJoin], used to set the line segment join style to this
  ///    value.
  ///  * [Paint.strokeMiterLimit], used to define when a miter is drawn instead
  ///    of a bevel when the join is set to this value.
  miter,

  /// Joins between line segments are semi-circular.
  ///
  /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/round_join.mp4}
  ///
  /// The center of the line segment is colored in the diagram above to
  /// highlight the join, but in normal usage the join is the same color as the
  /// line.
  ///
  /// See also:
  ///
  ///  * [Paint.strokeJoin], used to set the line segment join style to this
  ///    value.
  round,

  /// Joins between line segments connect the corners of the butt ends of the
  /// line segments to give a beveled appearance.
  ///
  /// {@animation 300 300 https://flutter.github.io/assets-for-api-docs/assets/dart-ui/bevel_join.mp4}
  ///
  /// The center of the line segment is colored in the diagram above to
  /// highlight the join, but in normal usage the join is the same color as the
  /// line.
  ///
  /// See also:
  ///
  ///  * [Paint.strokeJoin], used to set the line segment join style to this
  ///    value.
  bevel,
}

/// Strategies for painting shapes and paths on a canvas.
///
/// See [Paint.style].
enum PaintingStyle {
  /// Apply the [Paint] to the inside of the shape. For example, when
  /// applied to the [Canvas.drawCircle] call, this results in a disc
  /// of the given size being painted.
  fill,

  /// Apply the [Paint] to the edge of the shape. For example, when
  /// applied to the [Canvas.drawCircle] call, this results in a hoop
  /// of the given size being painted. The line drawn on the edge will
  /// be the width given by the [Paint.strokeWidth] property.
  stroke,
}

/// Algorithms to use when painting on the canvas.
///
/// When drawing a shape or image onto a canvas, different algorithms can be
/// used to blend the pixels. The different values of [BlendMode] specify
/// different such algorithms.
///
/// Each algorithm has two inputs, the _source_, which is the image being drawn,
/// and the _destination_, which is the image into which the source image is
/// being composited. The destination is often thought of as the _background_.
/// The source and destination both have four color channels, the red, green,
/// blue, and alpha channels. These are typically represented as numbers in the
/// range 0.0 to 1.0. The output of the algorithm also has these same four
/// channels, with values computed from the source and destination.
///
/// The documentation of each value below describes how the algorithm works. In
/// each case, an image shows the output of blending a source image with a
/// destination image. In the images below, the destination is represented by an
/// image with horizontal lines and an opaque landscape photograph, and the
/// source is represented by an image with vertical lines (the same lines but
/// rotated) and a bird clip-art image. The [src] mode shows only the source
/// image, and the [dst] mode shows only the destination image. In the
/// documentation below, the transparency is illustrated by a checkerboard
/// pattern. The [clear] mode drops both the source and destination, resulting
/// in an output that is entirely transparent (illustrated by a solid
/// checkerboard pattern).
///
/// The horizontal and vertical bars in these images show the red, green, and
/// blue channels with varying opacity levels, then all three color channels
/// together with those same varying opacity levels, then all three color
/// channels set to zero with those varying opacity levels, then two bars
/// showing a red/green/blue repeating gradient, the first with full opacity and
/// the second with partial opacity, and finally a bar with the three color
/// channels set to zero but the opacity varying in a repeating gradient.
///
/// ## Application to the [Canvas] API
///
/// When using [Canvas.saveLayer] and [Canvas.restore], the blend mode of the
/// [Paint] given to the [Canvas.saveLayer] will be applied when
/// [Canvas.restore] is called. Each call to [Canvas.saveLayer] introduces a new
/// layer onto which shapes and images are painted; when [Canvas.restore] is
/// called, that layer is then composited onto the parent layer, with the source
/// being the most-recently-drawn shapes and images, and the destination being
/// the parent layer. (For the first [Canvas.saveLayer] call, the parent layer
/// is the canvas itself.)
///
/// See also:
///
///  * [Paint.blendMode], which uses [BlendMode] to define the compositing
///    strategy.
enum BlendMode {
  // This list comes from Skia's SkXfermode.h and the values (order) should be
  // kept in sync.
  // See: https://skia.org/user/api/skpaint#SkXfermode

  /// Drop both the source and destination images, leaving nothing.
  ///
  /// This corresponds to the "clear" Porter-Duff operator.
  clear,

  /// Drop the destination image, only paint the source image.
  ///
  /// Conceptually, the destination is first cleared, then the source image is
  /// painted.
  ///
  /// This corresponds to the "Copy" Porter-Duff operator.
  src,

  /// Drop the source image, only paint the destination image.
  ///
  /// Conceptually, the source image is discarded, leaving the destination
  /// untouched.
  ///
  /// This corresponds to the "Destination" Porter-Duff operator.
  dst,

  /// Composite the source image over the destination image.
  ///
  /// This is the default value. It represents the most intuitive case, where
  /// shapes are painted on top of what is below, with transparent areas showing
  /// the destination layer.
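  ///
  /// As a sketch of the per-channel arithmetic for straight (non-premultiplied)
  /// values in the range 0.0 to 1.0, following the same approach as
  /// [Color.alphaBlend] (the helper name and parameters below are hypothetical
  /// and not part of this library):
  ///
  /// ```dart
  /// // Returns one output color channel for "source over destination".
  /// double srcOverChannel(double src, double srcA, double dst, double dstA) {
  ///   // Output opacity: the source plus whatever destination shows through.
  ///   final double outA = srcA + dstA * (1.0 - srcA);
  ///   if (outA == 0.0) {
  ///     return 0.0; // Both inputs fully transparent.
  ///   }
  ///   return (src * srcA + dst * dstA * (1.0 - srcA)) / outA;
  /// }
  /// ```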
  ///
  /// This corresponds to the "Source over Destination" Porter-Duff operator,
  /// also known as the Painter's Algorithm.
  srcOver,

  /// Composite the source image under the destination image.
  ///
  /// This is the opposite of [srcOver].
  ///
  /// This corresponds to the "Destination over Source" Porter-Duff operator.
  ///
  /// This is useful when the source image should have been painted before the
  /// destination image, but could not be.
  dstOver,

  /// Show the source image, but only where the two images overlap. The
  /// destination image is not rendered, it is treated merely as a mask. The
  /// color channels of the destination are ignored, only the opacity has an
  /// effect.
  ///
  /// To show the destination image instead, consider [dstIn].
  ///
  /// To reverse the semantic of the mask (only showing the source where the
  /// destination is absent, rather than where it is present), consider
  /// [srcOut].
  ///
  /// This corresponds to the "Source in Destination" Porter-Duff operator.
  srcIn,

  /// Show the destination image, but only where the two images overlap. The
  /// source image is not rendered, it is treated merely as a mask. The color
  /// channels of the source are ignored, only the opacity has an effect.
  ///
  /// To show the source image instead, consider [srcIn].
  ///
  /// To reverse the semantic of the mask (only showing the destination where
  /// the source is absent, rather than where it is present), consider
  /// [dstOut].
  ///
  /// This corresponds to the "Destination in Source" Porter-Duff operator.
  dstIn,

  /// Show the source image, but only where the two images do not overlap. The
  /// destination image is not rendered, it is treated merely as a mask.
  /// The color channels of the destination are ignored, only the opacity has
  /// an effect.
  ///
  /// To show the destination image instead, consider [dstOut].
  ///
  /// To reverse the semantic of the mask (only showing the source where the
  /// destination is present, rather than where it is absent), consider [srcIn].
  ///
  /// This corresponds to the "Source out Destination" Porter-Duff operator.
  srcOut,

  /// Show the destination image, but only where the two images do not overlap.
  /// The source image is not rendered, it is treated merely as a mask. The
  /// color channels of the source are ignored, only the opacity has an effect.
  ///
  /// To show the source image instead, consider [srcOut].
  ///
  /// To reverse the semantic of the mask (only showing the destination where
  /// the source is present, rather than where it is absent), consider [dstIn].
  ///
  /// This corresponds to the "Destination out Source" Porter-Duff operator.
  dstOut,

  /// Composite the source image over the destination image, but only where it
  /// overlaps the destination.
  ///
  /// This corresponds to the "Source atop Destination" Porter-Duff operator.
  ///
  /// This is essentially the [srcOver] operator, but with the output's opacity
  /// channel being set to that of the destination image instead of being a
  /// combination of both images' opacity channels.
  ///
  /// For a variant with the destination on top instead of the source, see
  /// [dstATop].
  srcATop,

  /// Composite the destination image over the source image, but only where it
  /// overlaps the source.
  ///
  /// This corresponds to the "Destination atop Source" Porter-Duff operator.
  ///
  /// This is essentially the [dstOver] operator, but with the output's opacity
  /// channel being set to that of the source image instead of being a
  /// combination of both images' opacity channels.
  ///
  /// For a variant with the source on top instead of the destination, see
  /// [srcATop].
  dstATop,

  /// Apply a bitwise `xor` operator to the source and destination images. This
  /// leaves transparency where they would overlap.
  ///
  /// This corresponds to the "Source xor Destination" Porter-Duff operator.
  xor,

  /// Sum the components of the source and destination images.
  ///
  /// Transparency in a pixel of one of the images reduces the contribution of
  /// that image to the corresponding output pixel, as if the color of that
  /// pixel in that image was darker.
  ///
  /// This corresponds to the "Source plus Destination" Porter-Duff operator.
  plus,

  /// Multiply the color components of the source and destination images.
  ///
  /// This can only result in the same or darker colors (multiplying by white,
  /// 1.0, results in no change; multiplying by black, 0.0, results in black).
  ///
  /// When compositing two opaque images, this has similar effect to overlapping
  /// two transparencies on a projector.
  ///
  /// For a variant that also multiplies the alpha channel, consider [multiply].
  ///
  /// See also:
  ///
  ///  * [screen], which does a similar computation but inverted.
  ///  * [overlay], which combines [modulate] and [screen] to favor the
  ///    destination image.
  ///  * [hardLight], which combines [modulate] and [screen] to favor the
  ///    source image.
  modulate,

  // Following blend modes are defined in the CSS Compositing standard.

  /// Multiply the inverse of the components of the source and destination
  /// images, and invert the result.
  ///
  /// Inverting the components means that a fully saturated channel (opaque
  /// white) is treated as the value 0.0, and values normally treated as 0.0
  /// (black, transparent) are treated as 1.0.
  ///
  /// This is essentially the same as [modulate] blend mode, but with the values
  /// of the colors inverted before the multiplication and the result being
  /// inverted back before rendering.
  ///
  /// This can only result in the same or lighter colors (multiplying by black,
  /// 1.0, results in no change; multiplying by white, 0.0, results in white).
  /// Similarly, in the alpha channel, it can only result in more opaque colors.
  ///
  /// This has similar effect to two projectors displaying their images on the
  /// same screen simultaneously.
  ///
  /// See also:
  ///
  ///  * [modulate], which does a similar computation but without inverting the
  ///    values.
  ///  * [overlay], which combines [modulate] and [screen] to favor the
  ///    destination image.
  ///  * [hardLight], which combines [modulate] and [screen] to favor the
  ///    source image.
  screen, // The last coeff mode.

  /// Multiply the components of the source and destination images after
  /// adjusting them to favor the destination.
  ///
  /// Specifically, if the destination value is smaller, this multiplies it with
  /// the source value, whereas if the source value is smaller, it multiplies
  /// the inverse of the source value with the inverse of the destination value,
  /// then inverts the result.
  ///
  /// Inverting the components means that a fully saturated channel (opaque
  /// white) is treated as the value 0.0, and values normally treated as 0.0
  /// (black, transparent) are treated as 1.0.
  ///
  /// See also:
  ///
  ///  * [modulate], which always multiplies the values.
  ///  * [screen], which always multiplies the inverses of the values.
  ///  * [hardLight], which is similar to [overlay] but favors the source image
  ///    instead of the destination image.
  overlay,

  /// Composite the source and destination image by choosing the lowest value
  /// from each color channel.
  ///
  /// The opacity of the output image is computed in the same way as for
  /// [srcOver].
  darken,

  /// Composite the source and destination image by choosing the highest value
  /// from each color channel.
  ///
  /// The opacity of the output image is computed in the same way as for
  /// [srcOver].
  lighten,

  /// Divide the destination by the inverse of the source.
  ///
  /// Inverting the components means that a fully saturated channel (opaque
  /// white) is treated as the value 0.0, and values normally treated as 0.0
  /// (black, transparent) are treated as 1.0.
  colorDodge,

  /// Divide the inverse of the destination by the source, and invert the result.
  ///
  /// Inverting the components means that a fully saturated channel (opaque
  /// white) is treated as the value 0.0, and values normally treated as 0.0
  /// (black, transparent) are treated as 1.0.
  colorBurn,

  /// Multiply the components of the source and destination images after
  /// adjusting them to favor the source.
  ///
  /// Specifically, if the source value is smaller, this multiplies it with the
  /// destination value, whereas if the destination value is smaller, it
  /// multiplies the inverse of the destination value with the inverse of the
  /// source value, then inverts the result.
  ///
  /// Inverting the components means that a fully saturated channel (opaque
  /// white) is treated as the value 0.0, and values normally treated as 0.0
  /// (black, transparent) are treated as 1.0.
  ///
  /// See also:
  ///
  ///  * [modulate], which always multiplies the values.
  ///  * [screen], which always multiplies the inverses of the values.
  ///  * [overlay], which is similar to [hardLight] but favors the destination
  ///    image instead of the source image.
  hardLight,

  /// Use [colorDodge] for source values below 0.5 and [colorBurn] for source
  /// values above 0.5.
  ///
  /// This results in a similar but softer effect than [overlay].
  ///
  /// See also:
  ///
  ///  * [color], which is a more subtle tinting effect.
  softLight,

  /// Subtract the smaller value from the bigger value for each channel.
  ///
  /// Compositing black has no effect; compositing white inverts the colors of
  /// the other image.
  ///
  /// The opacity of the output image is computed in the same way as for
  /// [srcOver].
  ///
  /// The effect is similar to [exclusion] but harsher.
  difference,

  /// Subtract double the product of the two images from the sum of the two
  /// images.
  ///
  /// Compositing black has no effect; compositing white inverts the colors of
  /// the other image.
  ///
  /// The opacity of the output image is computed in the same way as for
  /// [srcOver].
  ///
  /// The effect is similar to [difference] but softer.
  exclusion,

  /// Multiply the components of the source and destination images, including
  /// the alpha channel.
  ///
  /// This can only result in the same or darker colors (multiplying by white,
  /// 1.0, results in no change; multiplying by black, 0.0, results in black).
  ///
  /// Since the alpha channel is also multiplied, a fully-transparent pixel
  /// (opacity 0.0) in one image results in a fully transparent pixel in the
  /// output. This is similar to [dstIn], but with the colors combined.
  ///
  /// For a variant that multiplies the colors but does not multiply the alpha
  /// channel, consider [modulate].
  multiply, // The last separable mode.

  /// Take the hue of the source image, and the saturation and luminosity of the
  /// destination image.
  ///
  /// The effect is to tint the destination image with the source image.
  ///
  /// The opacity of the output image is computed in the same way as for
  /// [srcOver]. Regions that are entirely transparent in the source image take
  /// their hue from the destination.
  ///
  /// See also:
  ///
  ///  * [color], which is a similar but stronger effect as it also applies the
  ///    saturation of the source image.
  ///  * [HSVColor], which allows colors to be expressed using Hue rather than
  ///    the red/green/blue channels of [Color].
  hue,

  /// Take the saturation of the source image, and the hue and luminosity of the
  /// destination image.
  ///
  /// The opacity of the output image is computed in the same way as for
  /// [srcOver]. Regions that are entirely transparent in the source image take
  /// their saturation from the destination.
  ///
  /// See also:
  ///
  ///  * [color], which also applies the hue of the source image.
  ///  * [luminosity], which applies the luminosity of the source image to the
  ///    destination.
  saturation,

  /// Take the hue and saturation of the source image, and the luminosity of the
  /// destination image.
  ///
  /// The effect is to tint the destination image with the source image.
  ///
  /// The opacity of the output image is computed in the same way as for
  /// [srcOver]. Regions that are entirely transparent in the source image take
  /// their hue and saturation from the destination.
  ///
  /// See also:
  ///
  ///  * [hue], which is a similar but weaker effect.
  ///  * [softLight], which is a similar tinting effect but also tints white.
  ///  * [saturation], which only applies the saturation of the source image.
  color,

  /// Take the luminosity of the source image, and the hue and saturation of the
  /// destination image.
  ///
  /// The opacity of the output image is computed in the same way as for
  /// [srcOver]. Regions that are entirely transparent in the source image take
  /// their luminosity from the destination.
  ///
  /// See also:
  ///
  ///  * [saturation], which applies the saturation of the source image to the
  ///    destination.
  ///  * [ImageFilter.blur], which can be used with [BackdropFilter] for a
  ///    related effect.
  luminosity,
}

/// Different ways to clip a widget's content.
enum Clip {
  /// No clip at all.
  ///
  /// This is the default option for most widgets: if the content does not
  /// overflow the widget boundary, don't pay any performance cost for clipping.
  ///
  /// If the content does overflow, please explicitly specify the following
  /// [Clip] options:
  ///  * [hardEdge], which is the fastest clipping, but with lower fidelity.
  ///  * [antiAlias], which is a little slower than [hardEdge], but with smoothed edges.
  ///  * [antiAliasWithSaveLayer], which is much slower than [antiAlias], and should
  ///    rarely be used.
  none,

  /// Clip, but do not apply anti-aliasing.
  ///
  /// This mode enables clipping, but curves and non-axis-aligned straight lines will be
  /// jagged as no effort is made to anti-alias.
  ///
  /// Faster than other clipping modes, but slower than [none].
  ///
  /// This is a reasonable choice when clipping is needed, if the container is an axis-
  /// aligned rectangle or an axis-aligned rounded rectangle with very small corner radii.
  ///
  /// See also:
  ///
  ///  * [antiAlias], which is more reasonable when clipping is needed and the shape is not
  ///    an axis-aligned rectangle.
  hardEdge,

  /// Clip with anti-aliasing.
  ///
  /// This mode has anti-aliased clipping edges to achieve a smoother look.
  ///
  /// It's much faster than [antiAliasWithSaveLayer], but slower than [hardEdge].
  ///
  /// This will be the common case when dealing with circles and arcs.
  ///
  /// Different from [hardEdge] and [antiAliasWithSaveLayer], this clipping may have
  /// bleeding edge artifacts.
  /// (See https://fiddle.skia.org/c/21cb4c2b2515996b537f36e7819288ae for an example.)
  ///
  /// See also:
  ///
  ///  * [hardEdge], which is a little faster, but with lower fidelity.
  ///  * [antiAliasWithSaveLayer], which is much slower, but can avoid the
  ///    bleeding edges if there's no other way.
  ///  * [Paint.isAntiAlias], which is the anti-aliasing switch for general draw operations.
  antiAlias,

  /// Clip with anti-aliasing and saveLayer immediately following the clip.
  ///
  /// This mode not only clips with anti-aliasing, but also allocates an offscreen
  /// buffer. All subsequent paints are carried out on that buffer before finally
  /// being clipped and composited back.
  ///
  /// This is very slow. It has no bleeding edge artifacts (that [antiAlias] has)
  /// but it changes the semantics as an offscreen buffer is now introduced.
  /// (See https://github.com/flutter/flutter/issues/18057#issuecomment-394197336
  /// for a difference between paint without saveLayer and paint with saveLayer.)
  ///
  /// This will be only rarely needed. One case where you might need this is if
  /// you have an image overlaid on a very different background color. In these
  /// cases, consider whether you can avoid overlaying multiple colors in one
  /// spot (e.g.
  /// by having the background color only present where the image is
  /// absent). If you can, [antiAlias] would be fine and much faster.
  ///
  /// See also:
  ///
  ///  * [antiAlias], which is much faster, and has similar clipping results.
  antiAliasWithSaveLayer,
}

/// Private Paint context data used for recording canvas commands allowing
/// Paint to be mutated post canvas draw operations.
class PaintData {
  BlendMode blendMode;
  PaintingStyle style;
  double strokeWidth;
  StrokeCap strokeCap;
  StrokeJoin strokeJoin;
  bool isAntiAlias = true;
  Color color;
  Shader shader;
  MaskFilter maskFilter;
  FilterQuality filterQuality;
  ColorFilter colorFilter;

  // Internal for recording canvas use.
  PaintData clone() {
    return PaintData()
      ..blendMode = blendMode
      ..filterQuality = filterQuality
      ..maskFilter = maskFilter
      ..shader = shader
      ..isAntiAlias = isAntiAlias
      ..color = color
      ..colorFilter = colorFilter
      ..strokeWidth = strokeWidth
      ..style = style
      ..strokeJoin = strokeJoin
      ..strokeCap = strokeCap;
  }
}

/// A description of the style to use when drawing on a [Canvas].
///
/// Most APIs on [Canvas] take a [Paint] object to describe the style
/// to use for that operation.
class Paint {
  PaintData _paintData = PaintData();

  /// A blend mode to apply when a shape is drawn or a layer is composited.
  ///
  /// The source colors are from the shape being drawn (e.g. from
  /// [Canvas.drawPath]) or layer being composited (the graphics that were drawn
  /// between the [Canvas.saveLayer] and [Canvas.restore] calls), after applying
  /// the [colorFilter], if any.
  ///
  /// The destination colors are from the background onto which the shape or
  /// layer is being composited.
  ///
  /// Defaults to [BlendMode.srcOver].
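  ///
  /// As a minimal sketch of the layer case described above (the
  /// `paintTinted` function and its `canvas` and `bounds` parameters are
  /// illustrative assumptions, not part of this file), a layer might be
  /// composited with a non-default blend mode like this:
  ///
  /// ```dart
  /// import 'dart:ui';
  ///
  /// void paintTinted(Canvas canvas, Rect bounds) {
  ///   // The Paint passed to saveLayer decides how the layer is blended
  ///   // into the background when restore() is called.
  ///   canvas.saveLayer(bounds, Paint()..blendMode = BlendMode.multiply);
  ///   canvas.drawRect(bounds, Paint()..color = const Color(0xFF2196F3));
  ///   canvas.restore();
  /// }
  /// ```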
  ///
  /// See also:
  ///
  ///  * [Canvas.saveLayer], which uses its [Paint]'s [blendMode] to composite
  ///    the layer when [restore] is called.
  ///  * [BlendMode], which discusses the use of [saveLayer] with [blendMode].
  BlendMode get blendMode => _paintData.blendMode ?? BlendMode.srcOver;
  set blendMode(BlendMode value) {
    if (_frozen) {
      _paintData = _paintData.clone();
      _frozen = false;
    }
    _paintData.blendMode = value;
  }

  BlendMode _blendMode;

  /// Whether to paint inside shapes, the edges of shapes, or both.
  ///
  /// If null, defaults to [PaintingStyle.fill].
  PaintingStyle get style => _paintData.style ?? PaintingStyle.fill;
  set style(PaintingStyle value) {
    if (_frozen) {
      _paintData = _paintData.clone();
      _frozen = false;
    }
    _paintData.style = value;
  }

  /// How wide to make edges drawn when [style] is set to
  /// [PaintingStyle.stroke] or [PaintingStyle.strokeAndFill]. The
  /// width is given in logical pixels measured in the direction
  /// orthogonal to the direction of the path.
  ///
  /// The values null and 0.0 correspond to a hairline width.
  double get strokeWidth => _paintData.strokeWidth ?? 0.0;
  set strokeWidth(double value) {
    if (_frozen) {
      _paintData = _paintData.clone();
      _frozen = false;
    }
    _paintData.strokeWidth = value;
  }

  /// The kind of finish to place on the end of lines drawn when
  /// [style] is set to [PaintingStyle.stroke] or
  /// [PaintingStyle.strokeAndFill].
  ///
  /// If null, defaults to [StrokeCap.butt], i.e. no caps.
  StrokeCap get strokeCap => _paintData.strokeCap;
  set strokeCap(StrokeCap value) {
    if (_frozen) {
      _paintData = _paintData.clone();
      _frozen = false;
    }
    _paintData.strokeCap = value;
  }

  /// The kind of finish to use for line segment joins.
  /// This applies when [style] is set to [PaintingStyle.stroke] or
  /// [PaintingStyle.strokeAndFill]. It only applies to [Canvas.drawPath], not
  /// [Canvas.drawPoints].
  ///
  /// If null, defaults to [StrokeJoin.miter], i.e. sharp corners.
  StrokeJoin get strokeJoin => _paintData.strokeJoin;
  set strokeJoin(StrokeJoin value) {
    if (_frozen) {
      _paintData = _paintData.clone();
      _frozen = false;
    }
    _paintData.strokeJoin = value;
  }

  /// Whether to apply anti-aliasing to lines and images drawn on the
  /// canvas.
  ///
  /// Defaults to true. The value null is treated as false.
  bool get isAntiAlias => _paintData.isAntiAlias;
  set isAntiAlias(bool value) {
    if (_frozen) {
      _paintData = _paintData.clone();
      _frozen = false;
    }
    _paintData.isAntiAlias = value;
  }

  Color get color => _paintData.color;
  set color(Color value) {
    if (_frozen) {
      _paintData = _paintData.clone();
      _frozen = false;
    }
    _paintData.color = value;
  }

  /// Whether the colors of the image are inverted when drawn.
  ///
  /// Inverting the colors of an image applies a new color filter that will
  /// be composed with any user provided color filters. This is primarily
  /// used for implementing smart invert on iOS.
  bool get invertColors {
    return false;
  }

  set invertColors(bool value) {}

  Color _color = _defaultPaintColor;
  static const Color _defaultPaintColor = Color(0xFF000000);

  /// The shader to use when stroking or filling a shape.
  ///
  /// When this is null, the [color] is used instead.
  ///
  /// See also:
  ///
  ///  * [Gradient], a shader that paints a color gradient.
  ///  * [ImageShader], a shader that tiles an [Image].
  ///  * [colorFilter], which overrides [shader].
  ///  * [color], which is used if [shader] and [colorFilter] are null.
  Shader get shader => _paintData.shader;
  set shader(Shader value) {
    if (_frozen) {
      _paintData = _paintData.clone();
      _frozen = false;
    }
    _paintData.shader = value;
  }

  /// A mask filter (for example, a blur) to apply to a shape after it has been
  /// drawn but before it has been composited into the image.
  ///
  /// See [MaskFilter] for details.
  MaskFilter get maskFilter => _paintData.maskFilter;
  set maskFilter(MaskFilter value) {
    if (_frozen) {
      _paintData = _paintData.clone();
      _frozen = false;
    }
    _paintData.maskFilter = value;
  }

  /// Controls the performance vs quality trade-off to use when applying
  /// filters, such as [maskFilter], or when drawing images, as with
  /// [Canvas.drawImageRect] or [Canvas.drawImageNine].
  ///
  /// Defaults to [FilterQuality.none].
  // TODO(ianh): verify that the image drawing methods actually respect this
  FilterQuality get filterQuality => _paintData.filterQuality;
  set filterQuality(FilterQuality value) {
    if (_frozen) {
      _paintData = _paintData.clone();
      _frozen = false;
    }
    _paintData.filterQuality = value;
  }

  /// A color filter to apply when a shape is drawn or when a layer is
  /// composited.
  ///
  /// See [ColorFilter] for details.
  ///
  /// When a shape is being drawn, [colorFilter] overrides [color] and [shader].
  ColorFilter get colorFilter => _paintData.colorFilter;
  set colorFilter(ColorFilter value) {
    if (_frozen) {
      _paintData = _paintData.clone();
      _frozen = false;
    }
    _paintData.colorFilter = value;
  }

  // TODO(flutter_web): see https://github.com/flutter/flutter/issues/33605
  double get strokeMiterLimit {
    return null;
  }

  set strokeMiterLimit(double value) {
    assert(value != null);
  }

  /// The [ImageFilter] to use when drawing raster images.
  ///
  /// For example, to blur an image using [Canvas.drawImage], apply an
  /// [ImageFilter.blur]:
  ///
  /// ```dart
  /// import 'dart:ui' as ui;
  ///
  /// ui.Image image;
  ///
  /// void paint(Canvas canvas, Size size) {
  ///   canvas.drawImage(
  ///     image,
  ///     Offset.zero,
  ///     Paint()..imageFilter = ui.ImageFilter.blur(sigmaX: .5, sigmaY: .5),
  ///   );
  /// }
  /// ```
  ///
  /// See also:
  ///
  ///  * [MaskFilter], which is used for drawing geometry.
  ImageFilter get imageFilter {
    // TODO(flutter/flutter#35156): Implement ImageFilter.
    return null;
  }

  set imageFilter(ImageFilter value) {
    // TODO(flutter/flutter#35156): Implement ImageFilter.
  }

  // True if this Paint instance has been used in a RecordingCanvas.
  bool _frozen = false;

  // Marks this paint object as previously used.
  PaintData get webOnlyPaintData {
    // Flip bit so next time object gets mutated we create a clone of
    // current paint data.
    _frozen = true;
    return _paintData;
  }

  @override
  String toString() {
    if (engine.assertionsEnabled) {
      final StringBuffer result = StringBuffer();
      String semicolon = '';
      result.write('Paint(');
      if (style == PaintingStyle.stroke) {
        result.write('$style');
        if (strokeWidth != null && strokeWidth != 0.0)
          result.write(' $strokeWidth');
        else
          result.write(' hairline');
        if (strokeCap != null && strokeCap != StrokeCap.butt)
          result.write(' $strokeCap');
        semicolon = '; ';
      }
      if (isAntiAlias != true) {
        result.write('${semicolon}antialias off');
        semicolon = '; ';
      }
      if (color != _defaultPaintColor) {
        if (color != null)
          result.write('$semicolon$color');
        else
          result.write('${semicolon}no color');
        semicolon = '; ';
      }
      result.write(')');
      return result.toString();
    } else {
      return super.toString();
    }
  }
}

/// Base class for objects such as [Gradient] and [ImageShader] which
/// correspond to shaders as used by [Paint.shader].
abstract class Shader {
  /// This class is created by the engine, and should not be instantiated
  /// or extended directly.
  Shader._();

  /// Creates a fill style to be used in painting.
  Object createPaintStyle(html.CanvasRenderingContext2D ctx);

  List<dynamic> webOnlySerializeToCssPaint() {
    throw UnsupportedError('CSS paint not implemented for this shader type');
  }
}

/// A shader (as used by [Paint.shader]) that renders a color gradient.
///
/// There are several types of gradients, represented by the various
/// constructors on this class.
abstract class Gradient extends Shader {
  Gradient._() : super._();

  /// Creates a linear gradient from `from` to `to`.
  ///
  /// If `colorStops` is provided, `colorStops[i]` is a number from 0.0 to 1.0
  /// that specifies where `colors[i]` begins in the gradient. If `colorStops` is
  /// not provided, then only two stops, at 0.0 and 1.0, are implied (and
  /// `colors` must therefore only have two entries).
  ///
  /// The behavior before `from` and after `to` is described by the `tileMode`
  /// argument. For details, see the [TileMode] enum.
  ///
  /// If `from`, `to`, `colors`, or `tileMode` are null, or if `colors` or
  /// `colorStops` contain null values, this constructor will throw a
  /// [NoSuchMethodError].
  factory Gradient.linear(
    Offset from,
    Offset to,
    List<Color> colors, [
    List<double> colorStops,
    TileMode tileMode = TileMode.clamp,
    Float64List
        matrix4, // TODO(flutter_web): see https://github.com/flutter/flutter/issues/32819
  ]) =>
      _GradientLinear(from, to, colors, colorStops, tileMode);

  /// Creates a radial gradient centered at `center` that ends at `radius`
  /// distance from the center.
  ///
  /// If `colorStops` is provided, `colorStops[i]` is a number from 0.0 to 1.0
  /// that specifies where `colors[i]` begins in the gradient. If `colorStops` is
  /// not provided, then only two stops, at 0.0 and 1.0, are implied (and
  /// `colors` must therefore only have two entries).
  ///
  /// The behavior before and after the radius is described by the `tileMode`
  /// argument. For details, see the [TileMode] enum.
  ///
  /// If `center`, `radius`, `colors`, or `tileMode` are null, or if `colors` or
  /// `colorStops` contain null values, this constructor will throw a
  /// [NoSuchMethodError].
  ///
  /// If `matrix4` is provided, the gradient fill will be transformed by the
  /// specified 4x4 matrix relative to the local coordinate system.
  /// `matrix4` must be a column-major matrix packed into a list of 16 values.
  ///
  /// If `focal` is provided and not equal to `center` and `focalRadius` is
  /// provided and not equal to 0.0, the generated shader will be a two point
  /// conical radial gradient, with `focal` being the center of the focal
  /// circle and `focalRadius` being the radius of that circle. If `focal` is
  /// provided and not equal to `center`, at least one of the two offsets must
  /// not be equal to [Offset.zero].
  factory Gradient.radial(Offset center, double radius, List<Color> colors,
      [List<double> colorStops,
      TileMode tileMode = TileMode.clamp,
      Float64List matrix4,
      Offset focal,
      double focalRadius = 0.0]) {
    focalRadius ??= 0.0;
    _validateColorStops(colors, colorStops);
    // If focal is null or focal radius is null, this should be treated as a regular radial gradient
    // If focal == center and the focal radius is 0.0, it's still a regular radial gradient
    if (focal == null || (focal == center && focalRadius == 0.0)) {
      return _GradientRadial(
          center, radius, colors, colorStops, tileMode, matrix4);
    } else {
      assert(center != Offset.zero ||
          focal != Offset.zero); // will result in exception(s) in Skia side
      return _GradientConical(focal, focalRadius, center, radius, colors,
          colorStops, tileMode, matrix4);
    }
  }

  /// Creates a sweep gradient centered at `center` that starts at `startAngle`
  /// and ends at `endAngle`.
  ///
  /// `startAngle` and `endAngle` should be provided in radians, with zero
  /// radians being the horizontal line to the right of the `center` and with
  /// positive angles going clockwise around the `center`.
  ///
  /// If `colorStops` is provided, `colorStops[i]` is a number from 0.0 to 1.0
  /// that specifies where `colors[i]` begins in the gradient.
  /// If `colorStops` is not provided, then only two stops, at 0.0 and 1.0, are
  /// implied (and `colors` must therefore only have two entries).
  ///
  /// The behavior before `startAngle` and after `endAngle` is described by the
  /// `tileMode` argument. For details, see the [TileMode] enum.
  ///
  /// If `center`, `colors`, `tileMode`, `startAngle`, or `endAngle` are null,
  /// or if `colors` or `colorStops` contain null values, this constructor will
  /// throw a [NoSuchMethodError].
  ///
  /// If `matrix4` is provided, the gradient fill will be transformed by the
  /// specified 4x4 matrix relative to the local coordinate system. `matrix4`
  /// must be a column-major matrix packed into a list of 16 values.
  factory Gradient.sweep(
    Offset center,
    List<Color> colors, [
    List<double> colorStops,
    TileMode tileMode = TileMode.clamp,
    double startAngle = 0.0,
    double endAngle = math.pi * 2,
    Float64List matrix4,
  ]) =>
      _GradientSweep(
          center, colors, colorStops, tileMode, startAngle, endAngle, matrix4);
}

class _GradientSweep extends Gradient {
  _GradientSweep(this.center, this.colors, this.colorStops, this.tileMode,
      this.startAngle, this.endAngle, this.matrix4)
      : assert(_offsetIsValid(center)),
        assert(colors != null),
        assert(tileMode != null),
        assert(startAngle != null),
        assert(endAngle != null),
        assert(startAngle < endAngle),
        assert(matrix4 == null || _matrix4IsValid(matrix4)),
        super._() {
    _validateColorStops(colors, colorStops);
  }

  @override
  Object createPaintStyle(_) {
    throw UnimplementedError();
  }

  final Offset center;
  final List<Color> colors;
  final List<double> colorStops;
  final TileMode tileMode;
  final double startAngle;
  final double endAngle;
  final Float64List matrix4;
}

void
_validateColorStops(List<Color> colors, List<double> colorStops) {
  if (colorStops == null) {
    if (colors.length != 2)
      throw ArgumentError(
          '"colors" must have length 2 if "colorStops" is omitted.');
  } else {
    if (colors.length != colorStops.length)
      throw ArgumentError(
          '"colors" and "colorStops" arguments must have equal length.');
  }
}

class _GradientLinear extends Gradient {
  _GradientLinear(
    this.from,
    this.to,
    this.colors,
    this.colorStops,
    this.tileMode,
  )   : assert(_offsetIsValid(from)),
        assert(_offsetIsValid(to)),
        assert(colors != null),
        assert(tileMode != null),
        super._() {
    _validateColorStops(colors, colorStops);
  }

  final Offset from;
  final Offset to;
  final List<Color> colors;
  final List<double> colorStops;
  final TileMode tileMode;

  @override
  html.CanvasGradient createPaintStyle(html.CanvasRenderingContext2D ctx) {
    final html.CanvasGradient gradient =
        ctx.createLinearGradient(from.dx, from.dy, to.dx, to.dy);
    if (colorStops == null) {
      assert(colors.length == 2);
      gradient.addColorStop(0, colors[0].toCssString());
      gradient.addColorStop(1, colors[1].toCssString());
      return gradient;
    }
    for (int i = 0; i < colors.length; i++) {
      gradient.addColorStop(colorStops[i], colors[i].toCssString());
    }
    return gradient;
  }

  @override
  List<dynamic> webOnlySerializeToCssPaint() {
    final List<dynamic> serializedColors = <dynamic>[];
    for (int i = 0; i < colors.length; i++) {
      serializedColors.add(colors[i].toCssString());
    }
    return <dynamic>[
      1,
      from.dx,
      from.dy,
      to.dx,
      to.dy,
      serializedColors,
      colorStops,
      tileMode.index
    ];
  }
}

class _GradientRadial extends Gradient {
  _GradientRadial(this.center, this.radius, this.colors, this.colorStops,
      this.tileMode, this.matrix4)
      : super._();

  final Offset center;
  final double radius;
  final List<Color> colors;
  final List<double> colorStops;
  final TileMode tileMode;
  final Float64List matrix4;

  @override
  Object createPaintStyle(_) {
    throw UnimplementedError();
  }
}

class _GradientConical extends Gradient {
  _GradientConical(this.focal, this.focalRadius, this.center, this.radius,
      this.colors, this.colorStops, this.tileMode, this.matrix4)
      : super._();

  final Offset focal;
  final double focalRadius;
  final Offset center;
  final double radius;
  final List<Color> colors;
  final List<double> colorStops;
  final TileMode tileMode;
  final Float64List matrix4;

  @override
  Object createPaintStyle(_) {
    throw UnimplementedError();
  }
}

/// Opaque handle to raw decoded image data (pixels).
///
/// To obtain an [Image] object, use [instantiateImageCodec].
///
/// To draw an [Image], use one of the methods on the [Canvas] class, such as
/// [Canvas.drawImage].
abstract class Image {
  /// The number of image pixels along the image's horizontal axis.
  int get width;

  /// The number of image pixels along the image's vertical axis.
  int get height;

  /// Converts the [Image] object into a byte array.
  ///
  /// The [format] argument specifies the format in which the bytes will be
  /// returned.
  ///
  /// Returns a future that completes with the binary image data or an error
  /// if encoding fails.
  Future<ByteData> toByteData(
      {ImageByteFormat format = ImageByteFormat.rawRgba});

  /// Release the resources used by this object. The object is no longer usable
  /// after this method is called.
  void dispose();

  @override
  String toString() => '[$width\u00D7$height]';
}

/// A description of a color filter to apply when drawing a shape or compositing
/// a layer with a particular [Paint]. A color filter is a function that takes
/// two colors, and outputs one color. When applied during compositing, it is
/// independently applied to each pixel of the layer being drawn before the
/// entire layer is merged with the destination.
///
/// Instances of this class are used with [Paint.colorFilter] on [Paint]
/// objects.
class ColorFilter {
  /// Creates a color filter that applies the blend mode given as the second
  /// argument. The source color is the one given as the first argument, and the
  /// destination color is the one from the layer being composited.
  ///
  /// The output of this filter is then composited into the background according
  /// to the [Paint.blendMode], using the output of this filter as the source
  /// and the background as the destination.
  const ColorFilter.mode(Color color, BlendMode blendMode)
      : _color = color,
        _blendMode = blendMode;

  /// Construct a color filter that transforms a color by a 4x5 matrix. The
  /// matrix is in row-major order and the translation column is specified in
  /// unnormalized, 0...255, space.
  const ColorFilter.matrix(List<double> matrix)
      : _color = null,
        _blendMode = null;

  /// Construct a color filter that applies the sRGB gamma curve to the RGB
  /// channels.
  const ColorFilter.linearToSrgbGamma()
      : _color = null,
        _blendMode = null;

  /// Creates a color filter that applies the inverse of the sRGB gamma curve
  /// to the RGB channels.
  const ColorFilter.srgbToLinearGamma()
      : _color = null,
        _blendMode = null;

  final Color _color;
  final BlendMode _blendMode;

  @override
  bool operator ==(dynamic other) {
    if (other is! ColorFilter) return false;
    final ColorFilter typedOther = other;
    return _color == typedOther._color && _blendMode == typedOther._blendMode;
  }

  @override
  int get hashCode => hashValues(_color, _blendMode);

  List<dynamic> webOnlySerializeToCssPaint() {
    throw UnsupportedError('ColorFilter for CSS paint not yet supported');
  }

  @override
  String toString() => engine.assertionsEnabled
      ? 'ColorFilter($_color, $_blendMode)'
      : super.toString();
}

/// Styles to use for blurs in [MaskFilter] objects.
// These enum values must be kept in sync with SkBlurStyle.
enum BlurStyle {
  // These mirror SkBlurStyle and must be kept in sync.

  /// Fuzzy inside and outside. This is useful for painting shadows that are
  /// offset from the shape that ostensibly is casting the shadow.
  normal,

  /// Solid inside, fuzzy outside. This corresponds to drawing the shape, and
  /// additionally drawing the blur. This can make objects appear brighter,
  /// maybe even as if they were fluorescent.
  solid,

  /// Nothing inside, fuzzy outside. This is useful for painting shadows for
  /// partially transparent shapes, when they are painted separately but without
  /// an offset, so that the shadow doesn't paint below the shape.
  outer,

  /// Fuzzy inside, nothing outside. This can make shapes appear to be lit from
  /// within.
  inner,
}

/// A mask filter to apply to shapes as they are painted. A mask filter is a
/// function that takes a bitmap of color pixels, and returns another bitmap of
/// color pixels.
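///
/// As a minimal sketch of a soft drop shadow (the `paintShadow` function and
/// its `canvas` and `rect` parameters are illustrative assumptions, and the
/// color, offset, and sigma values are arbitrary):
///
/// ```dart
/// import 'dart:ui';
///
/// void paintShadow(Canvas canvas, Rect rect) {
///   // A translucent black paint whose shape is blurred before compositing.
///   final Paint shadowPaint = Paint()
///     ..color = const Color(0x66000000)
///     ..maskFilter = const MaskFilter.blur(BlurStyle.normal, 4.0);
///   // Draw the blurred shape slightly offset from the casting rectangle.
///   canvas.drawRect(rect.shift(const Offset(2.0, 2.0)), shadowPaint);
/// }
/// ```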
///
/// Instances of this class are used with [Paint.maskFilter] on [Paint] objects.
class MaskFilter {
  /// Creates a mask filter that takes the shape being drawn and blurs it.
  ///
  /// This is commonly used to approximate shadows.
  ///
  /// The `style` argument controls the kind of effect to draw; see [BlurStyle].
  ///
  /// The `sigma` argument controls the size of the effect. It is the standard
  /// deviation of the Gaussian blur to apply. The value must be greater than
  /// zero. The sigma corresponds to very roughly half the radius of the effect
  /// in pixels.
  ///
  /// A blur is an expensive operation and should therefore be used sparingly.
  ///
  /// The arguments must not be null.
  ///
  /// See also:
  ///
  ///  * [Canvas.drawShadow], which is a more efficient way to draw shadows.
  const MaskFilter.blur(
    this._style,
    this._sigma,
  )   : assert(_style != null),
        assert(_sigma != null);

  final BlurStyle _style;
  final double _sigma;

  /// On the web returns the value of sigma passed to [MaskFilter.blur].
  double get webOnlySigma => _sigma;

  @override
  bool operator ==(dynamic other) {
    if (other is! MaskFilter) {
      return false;
    }
    final MaskFilter typedOther = other;
    return _style == typedOther._style && _sigma == typedOther._sigma;
  }

  @override
  int get hashCode => hashValues(_style, _sigma);

  List<dynamic> webOnlySerializeToCssPaint() {
    return <dynamic>[_style?.index, _sigma];
  }

  @override
  String toString() => 'MaskFilter.blur($_style, ${_sigma.toStringAsFixed(1)})';
}

/// Quality levels for image filters.
///
/// See [Paint.filterQuality].
enum FilterQuality {
  // This list comes from Skia's SkFilterQuality.h and the values (order) should
  // be kept in sync.

  /// Fastest possible filtering, albeit also the lowest quality.
  ///
  /// Typically this implies nearest-neighbour filtering.
  none,

  /// Better quality than [none], faster than [medium].
  ///
  /// Typically this implies bilinear interpolation.
  low,

  /// Better quality than [low], faster than [high].
  ///
  /// Typically this implies a combination of bilinear interpolation and
  /// pyramidal parametric prefiltering (mipmaps).
  medium,

  /// Best possible quality filtering, albeit also the slowest.
  ///
  /// Typically this implies bicubic interpolation or better.
  high,
}

/// A filter operation to apply to a raster image.
///
/// See also:
///
///  * [BackdropFilter], a widget that applies [ImageFilter] to its rendering.
///  * [SceneBuilder.pushBackdropFilter], which is the low-level API for using
///    this class.
class ImageFilter {
  /// Creates an image filter that applies a Gaussian blur.
  ImageFilter.blur({this.sigmaX = 0.0, this.sigmaY = 0.0})
      : matrix4 = null,
        filterQuality = FilterQuality.low;

  ImageFilter.matrix(this.matrix4, {this.filterQuality = FilterQuality.low})
      : sigmaX = 0.0,
        sigmaY = 0.0 {
    // TODO(flutter_web): add implementation.
    throw UnimplementedError(
        'ImageFilter.matrix not implemented for web platform.');
    // if (matrix4.length != 16)
    //   throw ArgumentError('"matrix4" must have 16 entries.');
  }

  final Float64List matrix4;
  final FilterQuality filterQuality;
  final double sigmaX;
  final double sigmaY;
}

/// The format in which image bytes should be returned when using
/// [Image.toByteData].
enum ImageByteFormat {
  /// Raw RGBA format.
  ///
  /// Unencoded bytes, in RGBA row-primary form, 8 bits per channel.
  rawRgba,

  /// Raw unmodified format.
  ///
  /// Unencoded bytes, in the image's existing format. For example, a grayscale
  /// image may use a single 8-bit channel for each pixel.
  rawUnmodified,

  /// PNG format.
  ///
  /// A lossless compression format for images. This format is well suited for
  /// images with hard edges, such as screenshots or sprites, and images with
  /// text. Transparency is supported. The PNG format supports images up to
  /// 2,147,483,647 pixels in either dimension, though in practice available
  /// memory provides a more immediate limitation on maximum image size.
  ///
  /// PNG images normally use the `.png` file extension and the `image/png` MIME
  /// type.
  ///
  /// See also:
  ///
  ///  * <https://en.wikipedia.org/wiki/Portable_Network_Graphics>, the Wikipedia page on PNG.
  ///  * <https://tools.ietf.org/rfc/rfc2083.txt>, the PNG standard.
  png,
}

/// The format of pixel data given to [decodeImageFromPixels].
enum PixelFormat {
  /// Each pixel is 32 bits, with the highest 8 bits encoding red, the next 8
  /// bits encoding green, the next 8 bits encoding blue, and the lowest 8 bits
  /// encoding alpha.
  rgba8888,

  /// Each pixel is 32 bits, with the highest 8 bits encoding blue, the next 8
  /// bits encoding green, the next 8 bits encoding red, and the lowest 8 bits
  /// encoding alpha.
  bgra8888,
}

class _ImageInfo {
  _ImageInfo(this.width, this.height, this.format, this.rowBytes) {
    rowBytes ??= width * 4;
  }
  int width;
  int height;
  int format;
  int rowBytes;
}

/// Callback signature for [decodeImageFromList].
typedef ImageDecoderCallback = void Function(Image result);

/// Information for a single frame of an animation.
///
/// To obtain an instance of the [FrameInfo] interface, see
/// [Codec.getNextFrame].
abstract class FrameInfo {
  /// This class is created by the engine, and should not be instantiated
  /// or extended directly.
  ///
  /// To obtain an instance of the [FrameInfo] interface, see
  /// [Codec.getNextFrame].
  FrameInfo._();

  /// The duration this frame should be shown.
  Duration get duration => Duration(milliseconds: _durationMillis);
  int get _durationMillis => 0;

  /// The [Image] object for this frame.
  Image get image => null;
}

/// A handle to an image codec.
class Codec {
  /// This class is created by the engine, and should not be instantiated
  /// or extended directly.
  ///
  /// To obtain an instance of the [Codec] interface, see
  /// [instantiateImageCodec].
  Codec._();

  /// Number of frames in this image.
  int get frameCount => 0;

  /// Number of times to repeat the animation.
  ///
  /// * 0 when the animation should be played once.
  /// * -1 for infinite repetitions.
  int get repetitionCount => 0;

  /// Fetches the next animation frame.
  ///
  /// Wraps back to the first frame after returning the last frame.
  ///
  /// The returned future can complete with an error if the decoding has failed.
  Future<FrameInfo> getNextFrame() {
    return engine.futurize(_getNextFrame);
  }

  /// Returns an error message on failure, null on success.
  String _getNextFrame(engine.Callback<FrameInfo> callback) => null;

  /// Release the resources used by this object. The object is no longer usable
  /// after this method is called.
  void dispose() {}
}

/// Instantiates an image codec [Codec] object.
///
/// [list] is the binary image data (e.g. PNG or GIF binary data).
/// The data can be for either static or animated images.
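///
/// As a minimal sketch of decoding every frame (the `decodeAllFrames` name is
/// hypothetical, and `bytes` is assumed to hold encoded image data obtained
/// elsewhere):
///
/// ```dart
/// import 'dart:typed_data';
/// import 'dart:ui';
///
/// Future<List<Image>> decodeAllFrames(Uint8List bytes) async {
///   final Codec codec = await instantiateImageCodec(bytes);
///   final List<Image> frames = <Image>[];
///   // getNextFrame wraps around, so stop after frameCount calls.
///   for (int i = 0; i < codec.frameCount; i++) {
///     final FrameInfo frame = await codec.getNextFrame();
///     frames.add(frame.image);
///   }
///   return frames;
/// }
/// ```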
///
/// The following image formats are supported: {@macro flutter.dart:ui.imageFormats}
///
/// The returned future can complete with an error if the image decoding has
/// failed.
Future<Codec> instantiateImageCodec(Uint8List list,
    {double decodedCacheRatioCap = double.infinity}) {
  return engine.futurize((engine.Callback<Codec> callback) =>
      _instantiateImageCodec(list, callback, null));
}

/// Instantiates a [Codec] object for binary image data.
///
/// Returns an error message if the instantiation has failed, null otherwise.
String _instantiateImageCodec(
    Uint8List list, engine.Callback<Codec> callback, _ImageInfo imageInfo) {
  final html.Blob blob = html.Blob(<dynamic>[list.buffer]);
  callback(engine.HtmlBlobCodec(blob));
  return null;
}

Future<Codec> webOnlyInstantiateImageCodecFromUrl(Uri uri) {
  return engine.futurize((engine.Callback<Codec> callback) =>
      _instantiateImageCodecFromUrl(uri, callback));
}

String _instantiateImageCodecFromUrl(Uri uri, engine.Callback<Codec> callback) {
  callback(engine.HtmlCodec(uri.toString()));
  return null;
}

/// Loads a single image frame from a byte array into an [Image] object.
///
/// This is a convenience wrapper around [instantiateImageCodec].
/// Prefer using [instantiateImageCodec], which also supports multi-frame images.
void decodeImageFromList(Uint8List list, ImageDecoderCallback callback) {
  _decodeImageFromListAsync(list, callback);
}

Future<void> _decodeImageFromListAsync(
    Uint8List list, ImageDecoderCallback callback) async {
  final Codec codec = await instantiateImageCodec(list);
  final FrameInfo frameInfo = await codec.getNextFrame();
  callback(frameInfo.image);
}

/// Convert an array of pixel values into an [Image] object.
///
/// [pixels] is the pixel data in the encoding described by [format].
///
/// [rowBytes] is the number of bytes consumed by each row of pixels in the
/// data buffer. If unspecified, it defaults to [width] multiplied by the
/// number of bytes per pixel in the provided [format].
void decodeImageFromPixels(Uint8List pixels, int width, int height,
    PixelFormat format, ImageDecoderCallback callback,
    {int rowBytes}) {
  final _ImageInfo imageInfo =
      _ImageInfo(width, height, format.index, rowBytes);
  final Future<Codec> codecFuture = engine.futurize(
      (engine.Callback<Codec> callback) =>
          _instantiateImageCodec(pixels, callback, imageInfo));
  codecFuture
      .then((Codec codec) => codec.getNextFrame())
      .then((FrameInfo frameInfo) => callback(frameInfo.image));
}

/// A single shadow.
///
/// Multiple shadows are stacked together in a [TextStyle].
class Shadow {
  /// Construct a shadow.
  ///
  /// The default shadow is a black shadow with zero offset and zero blur.
  /// Default shadows should be completely covered by the casting element,
  /// and not be visible.
  ///
  /// Transparency should be adjusted through the [color] alpha.
  ///
  /// Shadow order matters due to compositing multiple translucent objects not
  /// being commutative.
  const Shadow({
    this.color = const Color(_kColorDefault),
    this.offset = Offset.zero,
    this.blurRadius = 0.0,
  })  : assert(color != null, 'Text shadow color was null.'),
        assert(offset != null, 'Text shadow offset was null.'),
        assert(blurRadius >= 0.0,
            'Text shadow blur radius should be non-negative.');

  static const int _kColorDefault = 0xFF000000;

  /// Color that the shadow will be drawn with.
  ///
  /// The shadows are shapes composited directly over the base canvas, and do not
  /// represent optical occlusion.
  final Color color;

  /// The displacement of the shadow from the casting element.
  ///
  /// Positive x/y offsets will shift the shadow to the right and down, while
  /// negative offsets shift the shadow to the left and up. The offsets are
  /// relative to the position of the element that is casting it.
  final Offset offset;

  /// The standard deviation of the Gaussian to convolve with the shadow's shape.
  final double blurRadius;

  /// Converts a blur radius in pixels to sigmas.
  ///
  /// See the sigma argument to [MaskFilter.blur].
  ///
  // See SkBlurMask::ConvertRadiusToSigma().
  // <https://github.com/google/skia/blob/bb5b77db51d2e149ee66db284903572a5aac09be/src/effects/SkBlurMask.cpp#L23>
  static double convertRadiusToSigma(double radius) {
    return radius * 0.57735 + 0.5;
  }

  /// The [blurRadius] in sigmas instead of logical pixels.
  ///
  /// See the sigma argument to [MaskFilter.blur].
  double get blurSigma => convertRadiusToSigma(blurRadius);

  /// Create the [Paint] object that corresponds to this shadow description.
  ///
  /// The [offset] is not represented in the [Paint] object.
  /// To honor this as well, the shape should be translated by [offset] before
  /// being filled using this [Paint].
  ///
  /// This class does not provide a way to disable shadows to avoid inconsistencies
  /// in shadow blur rendering, primarily as a method of reducing test flakiness.
  /// [toPaint] should be overridden in subclasses to provide this functionality.
  Paint toPaint() {
    return Paint()
      ..color = color
      ..maskFilter = MaskFilter.blur(BlurStyle.normal, blurSigma);
  }

  /// Returns a new shadow with its [offset] and [blurRadius] scaled by the given
  /// factor.
  Shadow scale(double factor) {
    return Shadow(
      color: color,
      offset: offset * factor,
      blurRadius: blurRadius * factor,
    );
  }

  /// Linearly interpolate between two shadows.
  ///
  /// If either shadow is null, this function linearly interpolates from a
  /// shadow that matches the other shadow in color but has a zero
  /// offset and a zero blurRadius.
  ///
  /// {@template dart.ui.shadow.lerp}
  /// The `t` argument represents position on the timeline, with 0.0 meaning
  /// that the interpolation has not started, returning `a` (or something
  /// equivalent to `a`), 1.0 meaning that the interpolation has finished,
  /// returning `b` (or something equivalent to `b`), and values in between
  /// meaning that the interpolation is at the relevant point on the timeline
  /// between `a` and `b`. The interpolation can be extrapolated beyond 0.0 and
  /// 1.0, so negative values and values greater than 1.0 are valid (and can
  /// easily be generated by curves such as [Curves.elasticInOut]).
  ///
  /// Values for `t` are usually obtained from an [Animation<double>], such as
  /// an [AnimationController].
  /// {@endtemplate}
  static Shadow lerp(Shadow a, Shadow b, double t) {
    assert(t != null);
    if (a == null && b == null) {
      return null;
    }
    if (a == null) {
      return b.scale(t);
    }
    if (b == null) {
      return a.scale(1.0 - t);
    }
    return Shadow(
      color: Color.lerp(a.color, b.color, t),
      offset: Offset.lerp(a.offset, b.offset, t),
      blurRadius: lerpDouble(a.blurRadius, b.blurRadius, t),
    );
  }

  /// Linearly interpolate between two lists of shadows.
  ///
  /// If the lists differ in length, excess items are lerped with null.
  ///
  /// {@macro dart.ui.shadow.lerp}
  static List<Shadow> lerpList(List<Shadow> a, List<Shadow> b, double t) {
    assert(t != null);
    if (a == null && b == null) {
      return null;
    }
    a ??= <Shadow>[];
    b ??= <Shadow>[];
    final List<Shadow> result = <Shadow>[];
    final int commonLength = math.min(a.length, b.length);
    for (int i = 0; i < commonLength; i += 1) {
      result.add(Shadow.lerp(a[i], b[i], t));
    }
    for (int i = commonLength; i < a.length; i += 1) {
      result.add(a[i].scale(1.0 - t));
    }
    for (int i = commonLength; i < b.length; i += 1) {
      result.add(b[i].scale(t));
    }
    return result;
  }

  @override
  bool operator ==(dynamic other) {
    if (identical(this, other)) {
      return true;
    }
    if (other is! Shadow) {
      return false;
    }
    final Shadow typedOther = other;
    return color == typedOther.color &&
        offset == typedOther.offset &&
        blurRadius == typedOther.blurRadius;
  }

  @override
  int get hashCode => hashValues(color, offset, blurRadius);

  @override
  String toString() => 'TextShadow($color, $offset, $blurRadius)';
}
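
The null handling in `Shadow.lerp` and the radius-to-sigma conversion can be tried outside the engine with a small stand-alone sketch. It assumes null-safe Dart and does not import `dart:ui`. `SketchShadow` and `radiusToSigma` are hypothetical names introduced only for illustration; they copy the arithmetic from the listing above and leave out `color`.

```dart
// Stand-alone sketch (null-safe Dart, no dart:ui import).
// SketchShadow and radiusToSigma are hypothetical names, not engine API.

/// Same formula as Shadow.convertRadiusToSigma in the listing.
double radiusToSigma(double radius) => radius * 0.57735 + 0.5;

/// Minimal stand-in for Shadow that keeps only the fields that scale.
class SketchShadow {
  const SketchShadow(this.dx, this.dy, this.blurRadius);

  final double dx;
  final double dy;
  final double blurRadius;

  /// Scales the offset and the blur radius by [factor].
  SketchShadow scale(double factor) =>
      SketchShadow(dx * factor, dy * factor, blurRadius * factor);

  /// Follows the null handling of Shadow.lerp: a missing side is treated
  /// as a shadow with zero offset and zero blur.
  static SketchShadow? lerp(SketchShadow? a, SketchShadow? b, double t) {
    if (a == null && b == null) return null;
    if (a == null) return b!.scale(t);
    if (b == null) return a.scale(1.0 - t);
    return SketchShadow(
      a.dx + (b.dx - a.dx) * t,
      a.dy + (b.dy - a.dy) * t,
      a.blurRadius + (b.blurRadius - a.blurRadius) * t,
    );
  }
}

void main() {
  const SketchShadow a = SketchShadow(4.0, 8.0, 8.0);

  // Fading toward a missing shadow scales every field by 1 - t = 0.75,
  // giving dx 3, dy 6 and blurRadius 6.
  final SketchShadow faded = SketchShadow.lerp(a, null, 0.25)!;
  print('${faded.dx} ${faded.dy} ${faded.blurRadius}');

  // Sigma grows linearly with the radius and is offset by 0.5.
  print(radiusToSigma(a.blurRadius));
}
```

Because a missing shadow is treated as one with zero offset and zero blur, a shadow that appears or disappears during an animation grows or shrinks smoothly instead of popping in at full size.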